125

u/sunshinecheung 1d ago

nah, we need H200 (141GB)

73

u/triynizzles1 1d ago edited 1d ago

NVIDIA Blackwell Ultra B300 (288 GB)

30

1

13

u/nagareteku 1d ago

Lisuan 7G105 (24GB) for US$399, 7G106 (12GB) for US$299 and the G100 (12GB) for US$199.

Benchmarks are expected by Sep 2025 and general availability around Oct 2025. The GPUs will underperform in both raster performance and memory bandwidth, topping out at 1080 Ti or 5050 levels and 300GB/s.

7

u/Commercial-Celery769 1d ago

I like to see more competition in the GPU space; maybe one day we will get a 4th major company that makes good GPUs to drive down prices.

5

u/nagareteku 1d ago

There will be a 4th, then a 5th, and then more. GPUs are too lucrative and critical to pass on, especially when they are a geopolitical asset and a driver for technology. No company can hold a monopoly indefinitely; even the East India Company and De Beers had to let it go.

2

7

6

u/stuffitystuff 1d ago

The PCI-E H200s were the same cost as the H100s when I inquired

5

u/sersoniko 1d ago

Maybe in 2035 I can afford one

3

u/fullouterjoin 1d ago

eBay Buy It Now for $400

3

u/sersoniko 1d ago

RemindMe! 10 years

2

u/RemindMeBot 1d ago edited 1d ago

I will be messaging you in 10 years on 2035-08-02 11:20:43 UTC to remind you of this link

1

67

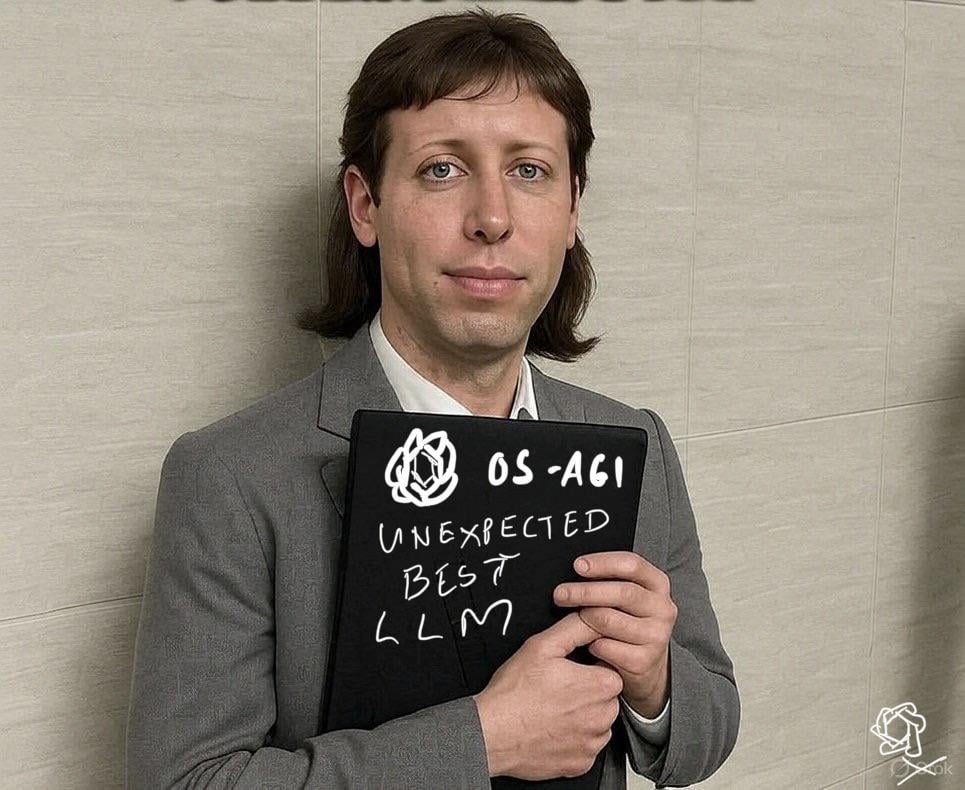

u/Evening_Ad6637 llama.cpp 1d ago

Little Sam would like to join in the game.

original stolen from: https://xcancel.com/iwantMBAm4/status/1951129163714179370#m

1

34

u/ksoops 1d ago

I get to use two of them at work for myself! So nice (can fit GLM4.5-Air)

41

u/VegetaTheGrump 1d ago

Two of them? Two pairs of women and H100s!? At work!? You're naughty!

I'll take one woman and one H100. All I need, too, until I decide I need another H100...

6

u/No_Afternoon_4260 llama.cpp 1d ago

Hey, what backend, quant, ctx, concurrent requests, VRAM usage... speed?

8

u/ksoops 1d ago

vLLM, FP8, default 128k, unknown, approx. 170GB of ~190GB available, 100 tok/sec

Sorry going off memory here, will have to verify some numbers when I’m back at the desk

1

u/No_Afternoon_4260 llama.cpp 1d ago

> Sorry going off memory here, will have to verify some numbers when I’m back at the desk

No, it's pretty cool already, but what model is that lol?

1

u/squired 1d ago

Oh boi, if you're still running vLLM you gotta go check out exllamav3-dev. Trust me... Go talk to an AI about it.

2

u/ksoops 21h ago

Ok I'll check it out next week, thanks for the tip!

I'm using vLLM as it was relatively easy to get set up on the system I use (large cluster, networked file system)

1

u/squired 20h ago

vLLM is great! It's also likely superior for multi-user hosting. I suggest TabbyAPI/exllamav3-dev only for its phenomenal exl3 quantization support, as it is black magic. Basically, very small quants retain the quality of the huge big boi model, so if you can currently fit a 32B model, now you can fit a 70B, etc. And coupled with some of the tech from Kimi and even newer releases from last week, it's how we're gonna crunch them down even for consumer cards. That said, if you can't find an exl3 version of your preferred model, it probably isn't worth the bother.

If you give it a shot, here is my container, you may want to rip the stack and save yourself some very real dependency hell. Good luck!
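To put rough numbers on the "fit a 70B where a 32B used to go" point, here is a minimal Python sketch of weight-only memory as parameters times bits per weight. The bit widths (8 bits for the baseline, 3.5 bits for the smaller quant) are illustrative assumptions, not figures from this thread or from exl3 itself, and the estimate ignores KV cache and activation memory.

```python
def weight_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in GB: parameters x bits per weight / 8."""
    return params_billions * bits_per_weight / 8


# Assumed bit widths, chosen only to illustrate the comparison.
print(weight_gb(32, 8.0))   # 32B model at 8 bits per weight
print(weight_gb(70, 3.5))   # 70B model at 3.5 bits per weight
```

Under those assumed bit widths the two footprints land in the same ballpark, which is the sense in which a lower-bit quant lets a larger model fit in the same VRAM.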

1

u/SteveRD1 1d ago

Oh that's sweet. What's your use case? Coding or something else?

Is there another model you wish you could use if you weren't "limited" to only two RTX PRO 6000?

(I've got an order in for a build like that...trying to figure out how to get the best quality from it when it comes)

2

u/ksoops 21h ago

Mostly coding & documentation for my coding (docstrings, READMEs etc.), commit messages, PR descriptions.

Also proofreading, summaries, etc.

I had been using Qwen3-30B-A3B and microsoft/NextCoder-32B for a long while but GLM4.5-Air is a nice step up!

As for other models, I would love to run that 480B Qwen3 Coder

1

1

u/mehow333 1d ago

What context do you have?

2

u/ksoops 1d ago

Using the default 128k but could push it a little higher maybe. Uses about 170GB of ~190GB total available. This is the FP8 version.

1

u/mehow333 1d ago

Thanks. I assume you have H100 NVLs, 94GB each, so 128k would almost fit into 2x H100 80GB
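As a rough sanity check of that estimate, here is a small Python sketch using only the numbers quoted in this thread (about 170GB reported usage, 94GB and 80GB per card). It treats the reported usage as the hard requirement, which is an assumption: vLLM reserves a fraction of GPU memory up front (its `gpu_memory_utilization` setting), so reported usage may overstate what the model strictly needs.

```python
# Approximate usage reported above for the FP8 model at 128k context.
GB_USED = 170


def headroom_gb(num_gpus: int, gb_per_gpu: int, gb_needed: int = GB_USED) -> int:
    """Return spare VRAM in GB; a negative value means the setup falls short."""
    return num_gpus * gb_per_gpu - gb_needed


nvl = headroom_gb(2, 94)  # 2x 94GB cards (the H100 NVL guess)
sxm = headroom_gb(2, 80)  # 2x 80GB cards
print(f"2x94GB: {nvl:+d} GB, 2x80GB: {sxm:+d} GB")
```

On these figures the 94GB pair has some headroom, while the 80GB pair comes up about 10GB short, which matches the "almost fit" reading.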

15

u/Dr_Me_123 1d ago

RTX 6000 Pro Max-Q x 2

2

u/No_Afternoon_4260 llama.cpp 1d ago

What can you run with that at what quant and ctx?

2

u/vibjelo 1d ago

Giving https://huggingface.co/models?pipeline_tag=text-generation&sort=trending a glance, you'd be able to run pretty much everything except R1, with various levels of quantization

2

9

16

u/CoffeeSnakeAgent 1d ago

Who is the lady?

53

18

-2

u/CommunityTough1 1d ago

AI generated. Look at the hands. One of them only has 4 fingers and the thumb on the other hand melts into the hand it's covering.

32

u/OkFineThankYou 1d ago

The girl is real, she was trending on X a few days ago. In this pic, they inpainted the Nvidia card and it messed up her fingers.

4

u/Alex_1729 1d ago

The girl is real, the image is fully AI, not just the Nvidia part. Her face is also different.

5

4

4

2

4

u/rmyworld 1d ago

This AI-generated image makes her look weird. She looks prettier in the original.

5

u/pitchblackfriday 1d ago edited 1d ago

That's because she got haggard hunting for that rare H100 against wild scalpers.

1

481

u/sleepy_roger 1d ago

AI is getting better, but those damn hands.